
Network Load Balancing: Concepts, Implementation, and Best Practices


By Thomas Inyang, 21 May 2025

Introduction

Have you ever wondered how major websites handle millions of visitors simultaneously without crashing? Or why a streaming service rarely buffers even during peak hours? The answer lies in a critical networking technology called load balancing.

Load balancing is the process of distributing network traffic efficiently across multiple servers so that no single server becomes overloaded. It is the unsung hero behind our digital experiences, quietly keeping web applications responsive, reliable, and resilient.

Whether you're a network administrator troubleshooting performance issues, an IT professional studying for your CCNA certification, or a business owner looking to improve your online services, understanding load balancing is essential in today's interconnected world.

This article will walk you through the following:

- What load balancing is.

- Load-balancing techniques.

- Types of load balancers and their application.

- Load-balancing algorithms, implementation, monitoring, and troubleshooting.

Fundamentals of Network Load Balancing

Network Load Balancing (NLB) is a technology that acts as a traffic director for your network. Imagine a busy intersection with a skilled traffic officer ensuring vehicles move efficiently in all directions—that's essentially what a load balancer does for your data.

When users request access to your application or website, the load balancer intercepts these requests and intelligently redirects them to the most appropriate server based on factors like current server load, availability, and defined routing rules.

Why Load Balancing Matters

Load balancing serves several critical functions in modern networks:

- High Availability: By distributing traffic across multiple servers, load balancers ensure that if one server fails, traffic is automatically redirected to another that is functioning, preventing downtime.

- Scalability: As your traffic grows, you can add more servers to your resource pool, and the load balancer will include them in its distribution once they are registered and pass health checks.

- Efficiency: Resources are utilized optimally, with traffic distributed to prevent any single server from becoming a bottleneck.

- Performance: Users experience faster response times because their requests are directed to the servers best equipped to handle them quickly.

Beyond traffic distribution, load balancers play a crucial security role by acting as a buffer between clients and your application servers. They can help mitigate DDoS attacks, perform SSL termination, and hide your server infrastructure details from potential attackers.

Load Balancing Technologies and the OSI Model

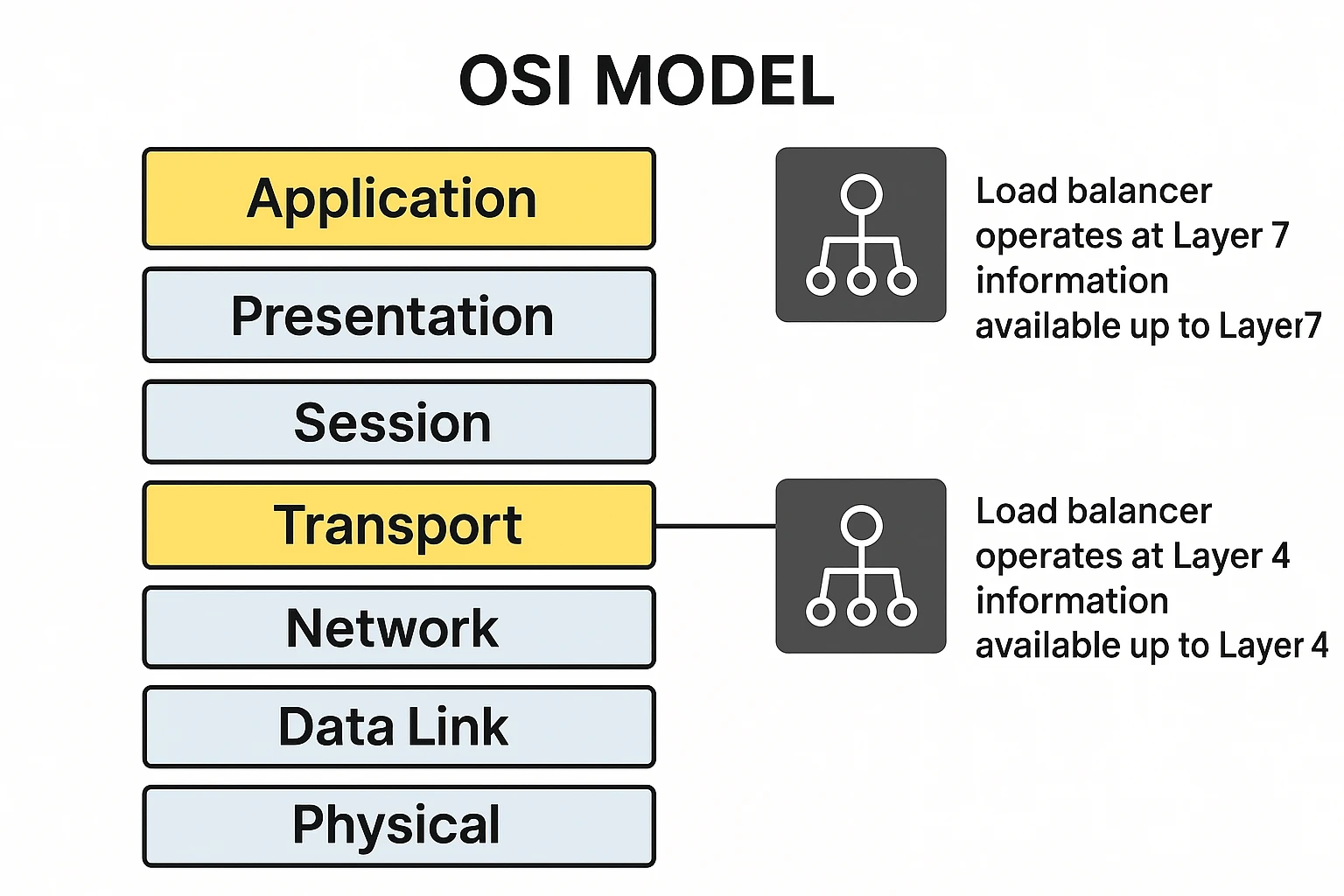

Load balancers are usually classified by the OSI layer at which they make routing decisions: Layer 4 (Transport layer) or Layer 7 (Application layer).

Layer 4 Load Balancing

Layer 4 load balancing works at the transport level and handles TCP and UDP traffic. These load balancers make routing decisions based on IP addresses and TCP/UDP port numbers without inspecting the content of the packets. As a result, Layer 4 load balancers are faster and less resource-intensive, but they cannot make decisions based on the content of a request. They are ideal for simple, high-performance scenarios where content inspection isn't necessary.
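To make the Layer 4 idea concrete, here is a minimal Python sketch, assuming a simplified model in which each packet is described only by its 5-tuple (source IP, source port, destination IP, destination port, protocol). New flows are assigned round-robin, and later packets of the same flow stick to the same backend, roughly what a connection-tracking Layer 4 balancer does. The `FlowTable` class and all addresses are hypothetical; real Layer 4 balancers do this in the kernel or in hardware.

```python
import itertools
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str  # "tcp" or "udp"


class FlowTable:
    """Hypothetical flow table: only addresses and ports are inspected,
    never the packet payload."""

    def __init__(self, backends):
        self._next = itertools.cycle(backends)  # round-robin for new flows
        self._flows = {}                        # FiveTuple -> backend

    def route(self, flow: FiveTuple) -> str:
        # First packet of a flow picks a backend; later packets reuse it.
        if flow not in self._flows:
            self._flows[flow] = next(self._next)
        return self._flows[flow]

    def close(self, flow: FiveTuple) -> None:
        # Caller invokes this when a TCP connection ends or a UDP flow times out.
        self._flows.pop(flow, None)


if __name__ == "__main__":
    table = FlowTable(["10.0.0.11:80", "10.0.0.12:80"])  # placeholder backends
    a = FiveTuple("198.51.100.7", 50001, "203.0.113.10", 80, "tcp")
    b = FiveTuple("198.51.100.8", 50002, "203.0.113.10", 80, "tcp")
    print(table.route(a), table.route(b), table.route(a))
    # 10.0.0.11:80 10.0.0.12:80 10.0.0.11:80
```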

Layer 7 Load Balancing

Layer 7 load balancing operates at the application level and can inspect the actual content of an HTTP request. This enables more sophisticated routing decisions based on URL patterns, HTTP headers, cookies, or other application-specific data. A Layer 7 load balancer can route based on the content of the request (e.g., sending API calls to specific servers), perform SSL/TLS termination, handle content caching, and provide more advanced health checking.
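The Python sketch below shows the kind of content-based decision a Layer 7 balancer makes once it has parsed the HTTP request. The pool names, path prefixes, and the `X-Canary` header rule are hypothetical examples, not the configuration syntax of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class HttpRequest:
    method: str
    path: str
    headers: dict = field(default_factory=dict)


# Hypothetical routing rules, checked in order: (path prefix, backend pool).
PATH_RULES = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(req: HttpRequest) -> str:
    """Pick a backend pool by inspecting HTTP headers and the URL path."""
    # HTTP header names are case-insensitive, so normalize them first.
    headers = {k.lower(): v for k, v in req.headers.items()}
    # Header-based rule (hypothetical): opt-in canary traffic gets its own pool.
    if headers.get("x-canary") == "1":
        return "canary-pool"
    # Path-based rules: the first matching prefix wins.
    for prefix, pool in PATH_RULES:
        if req.path.startswith(prefix):
            return pool
    return DEFAULT_POOL


if __name__ == "__main__":
    print(choose_pool(HttpRequest("GET", "/api/v1/users")))  # api-pool
    print(choose_pool(HttpRequest("GET", "/index.html")))    # web-pool
    print(choose_pool(HttpRequest("GET", "/api/v1/users", {"X-Canary": "1"})))  # canary-pool
```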

HTTP operates at Layer 7 (Application), while TCP operates at Layer 4 (Transport). This distinction explains why HTTP load balancing provides more sophisticated routing capabilities than TCP load balancing, as it has access to higher-level application data.

Types of Load Balancers and Their Applications

While categorizations may vary, the four primary types of load balancers are as follows:

- DNS Load Balancers

- Network/Layer 4 Load Balancers

- Application/Layer 7 Load Balancers

- Global Server Load Balancers (GSLB)

Let's explain each in more detail:

DNS Load Balancing

DNS load balancing distributes traffic at the domain name resolution level. When a user tries to access your website, the DNS server returns different IP addresses from a pool in a round-robin fashion or based on geographic location.

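In practice, DNS load balancing is often implemented by publishing multiple address records (A or AAAA) for the same domain name, each pointing to a different server IP address. Clients that query the domain receive the addresses in a rotating order, which spreads the load across the servers. Because resolvers and clients cache DNS answers, and plain round-robin DNS does not check whether a server is healthy, the resulting distribution is only approximate.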
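A small Python simulation of round-robin DNS, assuming an authoritative server that rotates the order of the address records it returns on each query. The domain name and the addresses (from the 192.0.2.0/24 documentation range) are placeholders, and the `RoundRobinZone` class is hypothetical; real DNS servers implement rotation in their own ways.

```python
from collections import deque


class RoundRobinZone:
    """Hypothetical zone: return all A records for a name, rotated one
    position per query."""

    def __init__(self, records):
        # records: dict mapping domain name -> list of IPv4 addresses
        self._records = {name: deque(ips) for name, ips in records.items()}

    def resolve(self, name):
        ips = self._records[name]
        answer = list(ips)
        ips.rotate(-1)  # the next query starts with the following address
        return answer


if __name__ == "__main__":
    zone = RoundRobinZone(
        {"www.example.com": ["192.0.2.10", "192.0.2.11", "192.0.2.12"]}
    )
    for _ in range(3):
        # Many clients simply connect to the first address in the answer.
        print(zone.resolve("www.example.com")[0])
    # 192.0.2.10, then 192.0.2.11, then 192.0.2.12
```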

Network Load Balancers

Network Load Balancers (NLBs) typically operate at Layer 4 (Transport layer), making routing decisions based on the IP protocol, IP addresses, and TCP/UDP ports. They distribute TCP and UDP traffic across multiple servers using this network-level information without examining the content of the packets, which makes them extremely fast and efficient for high-throughput scenarios.

Application Load Balancers

Application load balancers work at Layer 7, making routing decisions based on the content of the HTTP request, such as URL paths, headers, and cookies. This type of load balancer allows for more sophisticated traffic distribution based on what the client is actually requesting.

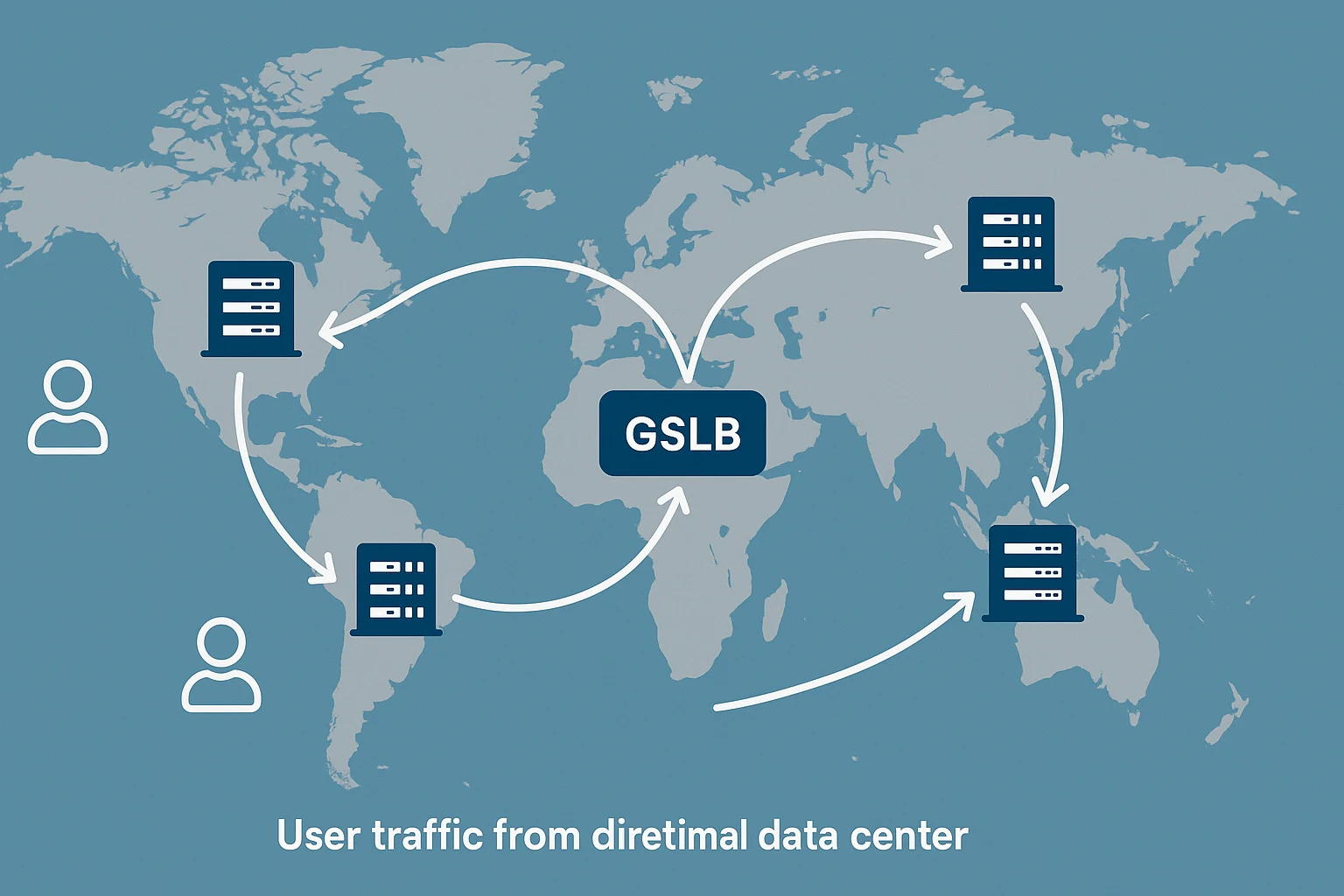

Global Server Load Balancers

GSLBs distribute traffic across multiple data centers or geographical regions, often incorporating both DNS techniques and application-aware routing to direct users to the nearest or most available data center.
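As a rough sketch of a GSLB decision, the Python below picks the healthy data center with the lowest measured latency for a client's region and falls back to any healthy site. The region names, latency figures, and data-center names are invented for illustration; production GSLBs combine health checks, geolocation data, and load metrics in product-specific ways.

```python
# Hypothetical round-trip latencies (ms) from client regions to data centers.
LATENCY_MS = {
    "eu": {"dc-frankfurt": 20, "dc-virginia": 90, "dc-singapore": 180},
    "us": {"dc-frankfurt": 95, "dc-virginia": 15, "dc-singapore": 210},
}


def pick_datacenter(client_region, healthy):
    """Return the lowest-latency healthy data center for this region.

    healthy: set of data-center names currently passing health checks.
    """
    options = LATENCY_MS.get(client_region, {})
    candidates = {dc: ms for dc, ms in options.items() if dc in healthy}
    if candidates:
        return min(candidates, key=candidates.get)
    if healthy:
        # Unknown region: fall back to any healthy site (sorted for determinism).
        return sorted(healthy)[0]
    raise RuntimeError("no healthy data centers")


if __name__ == "__main__":
    all_up = {"dc-frankfurt", "dc-virginia", "dc-singapore"}
    print(pick_datacenter("eu", all_up))                     # dc-frankfurt
    print(pick_datacenter("eu", all_up - {"dc-frankfurt"}))  # dc-virginia
```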

F5 Networks and BIG-IP in Load Balancing

F5 Networks is a leading provider of application delivery networking technology, best known for its BIG-IP platform. F5 specializes in load balancing, application security, and traffic management solutions that ensure applications are always secure, fast, and available.

The BIG-IP name originated from the company's first product, which was designed to handle large volumes of IP traffic. The platform has since evolved into a comprehensive suite of application delivery and security services. BIG-IP's architecture is described as a "full proxy" because it terminates client connections and establishes separate connections to the servers. This allows BIG-IP to inspect, manipulate, and optimize traffic in both directions, offering greater control and security than pass-through alternatives.
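To illustrate the "full proxy" idea (not F5's actual implementation), here is a minimal Python asyncio sketch. The proxy accepts and terminates the client's TCP connection itself, opens a second, separate connection to a backend, and copies bytes between the two; because it owns both connections, this is where a real full proxy could inspect or rewrite traffic. The backend address, listening port, and helper names are hypothetical, and error handling is deliberately minimal.

```python
import asyncio

BACKEND = ("127.0.0.1", 9000)  # hypothetical backend address


async def relay(reader, writer):
    """Copy bytes from one connection to the other until EOF."""
    try:
        while data := await reader.read(4096):
            # A real full proxy could inspect or modify `data` here.
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def handle_client(client_reader, client_writer, backend=BACKEND):
    # Connection 1 (client <-> proxy) is already terminated at this point;
    # connection 2 (proxy <-> server) is opened separately.
    server_reader, server_writer = await asyncio.open_connection(*backend)
    await asyncio.gather(
        relay(client_reader, server_writer),  # client -> server
        relay(server_reader, client_writer),  # server -> client
    )


async def main(listen_port=8080):  # hypothetical listening port
    server = await asyncio.start_server(handle_client, "127.0.0.1", listen_port)
    async with server:
        await server.serve_forever()


if __name__ == "__main__":
    asyncio.run(main())
```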


F5 is not primarily a firewall, but BIG-IP includes the Advanced Firewall Manager (AFM) module, which provides full-featured network firewall capabilities. BIG-IP can also function as a web application firewall (WAF) through its Application Security Manager (ASM) module, while the Access Policy Manager (APM) module provides comprehensive secure remote access and VPN capabilities.

BIG-IP also includes a DNS module, originally called Global Traffic Manager (GTM) and now known as BIG-IP DNS, which provides advanced DNS services, including global server load balancing and DNS security.

Load Balancing Algorithms and Methods

Implementing load balancing requires choosing appropriate distribution algorithms based on your specific needs. These algorithms determine how traffic is allocated across your server pool. While there are many algorithms, load-balancing methods can broadly be categorized as static (predetermined rules regardless of current conditions) and dynamic (adjusting based on real-time server performance and health).

Here are the most common load balancing algorithms:

- Round Robin: Requests are distributed sequentially across the server pool.

- Weighted Round Robin: Similar to round robin, but servers with higher capacity receive proportionally more traffic.

- Least Connection: New requests go to the server with the fewest active connections.

- Least Response Time: Directs traffic to the server with the lowest response time and fewest active connections.

- IP Hash: Uses a hash of the client's IP address to determine which server receives the request, so the same client consistently reaches the same server as long as the server pool doesn't change.
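The static algorithms and least connection each fit in a few lines. The Python sketch below is illustrative only: server names are placeholders, the weighted variant simply repeats each server `weight` times per cycle (some products interleave more smoothly), and IP hash uses SHA-256 because Python's built-in `hash()` for strings changes between processes. With plain modulo hashing, adding or removing a server remaps many clients; consistent hashing is a common way to reduce that.

```python
import hashlib
import itertools


class RoundRobin:
    """Static: hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class WeightedRoundRobin:
    """Static: a server with weight 3 appears 3 times per rotation."""

    def __init__(self, weights):
        # weights: dict mapping server name -> positive integer weight
        expanded = [s for s, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Dynamic: choose the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)  # ties: first listed
        self.active[server] += 1  # a connection was opened
        return server

    def release(self, server):
        self.active[server] -= 1  # a connection was closed


def ip_hash(client_ip, servers):
    """Map a client IP to a server using a hash that is stable across runs."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


if __name__ == "__main__":
    rr = RoundRobin(["web1", "web2", "web3"])
    print([rr.pick() for _ in range(4)])   # ['web1', 'web2', 'web3', 'web1']
    wrr = WeightedRoundRobin({"big": 3, "small": 1})
    print([wrr.pick() for _ in range(4)])  # ['big', 'big', 'big', 'small']
```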


Load balancers typically support TCP/UDP at Layer 4 and HTTP/HTTPS at Layer 7. The specific protocol used depends on the type of application being load balanced and the level at which the load balancer operates.

These load-balancing algorithms are mathematical formulas and logical rules that determine how incoming traffic is distributed across multiple servers, with options ranging from simple round-robin distribution to complex methods that consider server health, response times, and connection loads.
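The more complex, dynamic methods mentioned in the paragraph above can be sketched as well. Below is a minimal Python take on least response time, assuming an exponentially weighted moving average (EWMA) of response times per server, skipping servers marked unhealthy, and breaking ties by fewest active connections. The smoothing factor, the tie-breaking order, and the `Backend`/`LeastResponseTime` names are illustrative choices, not any vendor's formula.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True  # updated by health checks
    active: int = 0       # currently open connections
    avg_ms: float = 0.0   # smoothed response time; 0.0 means no samples yet


class LeastResponseTime:
    def __init__(self, backends, alpha=0.3):
        self.backends = {b.name: b for b in backends}
        self.alpha = alpha  # hypothetical weight of the newest sample

    def pick(self):
        candidates = [b for b in self.backends.values() if b.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends")
        # Lowest average response time first, then fewest active connections.
        # Servers with no samples yet (0.0) are therefore tried first.
        best = min(candidates, key=lambda b: (b.avg_ms, b.active))
        best.active += 1
        return best.name

    def record(self, name, elapsed_ms):
        """Call when a request to `name` completes, with its duration."""
        b = self.backends[name]
        b.active -= 1
        if b.avg_ms == 0.0:
            b.avg_ms = elapsed_ms  # first sample seeds the average
        else:
            b.avg_ms = self.alpha * elapsed_ms + (1 - self.alpha) * b.avg_ms
```

A balancer would call `pick()` when a request arrives and `record()` when its response finishes; flipping `healthy` to `False` from a health checker removes a server from consideration without touching its statistics.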

Implementing Network Load Balancing

Network Load Balancing is ideal when you need to do the following:

- Ensure high availability for critical applications

- Handle traffic spikes without service degradation

- Distribute load across multiple servers for better performance

- Perform server maintenance without downtime

- Scale your application horizontally by adding more servers
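The last two points, maintenance without downtime and horizontal scaling, usually come down to changing pool membership at runtime. Here is a minimal Python sketch, assuming a hypothetical `Pool` class that uses least connections: a new server joins the rotation immediately, and a server going down for maintenance is first "drained" (it receives no new connections, but in-flight ones are allowed to finish). Real products expose this as a drain or disable action in their own interfaces.

```python
class Pool:
    """Hypothetical server pool supporting scale-out and graceful draining."""

    def __init__(self):
        self.active = {}       # server -> open connection count
        self.draining = set()  # servers that accept no new connections

    def add(self, server):
        """Scale out: a new server becomes eligible right away."""
        self.active.setdefault(server, 0)
        self.draining.discard(server)

    def drain(self, server):
        """Start maintenance: stop sending new traffic to this server."""
        self.draining.add(server)

    def pick(self):
        eligible = [s for s in self.active if s not in self.draining]
        if not eligible:
            raise RuntimeError("no eligible servers")
        server = min(eligible, key=self.active.get)  # least connections
        self.active[server] += 1
        return server

    def finish(self, server):
        self.active[server] -= 1

    def safe_to_remove(self, server):
        """True once a draining server has no in-flight connections."""
        return server in self.draining and self.active[server] == 0

    def remove(self, server):
        if not self.safe_to_remove(server):
            raise RuntimeError(f"{server} still has active connections")
        del self.active[server]
        self.draining.discard(server)
```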

NLB is commonly implemented in the following setups:

- Web application environments

- API services

- Database clusters

- Microservice architectures

- Enterprise applications with high availability requirements

- Content delivery networks

The maximum number of servers in one NLB cluster varies by platform. Microsoft's NLB supports up to 32 nodes in a single cluster, while cloud providers and enterprise solutions like F5 can support significantly more.

Please Note: A cluster is a broader concept referring to a group of servers working together as a single system, often for high availability and sometimes sharing storage. NLB is specifically focused on distributing network traffic across multiple servers, and it's one of several technologies that might be used within a clustering solution.

How to Monitor and Troubleshoot Load Balancers

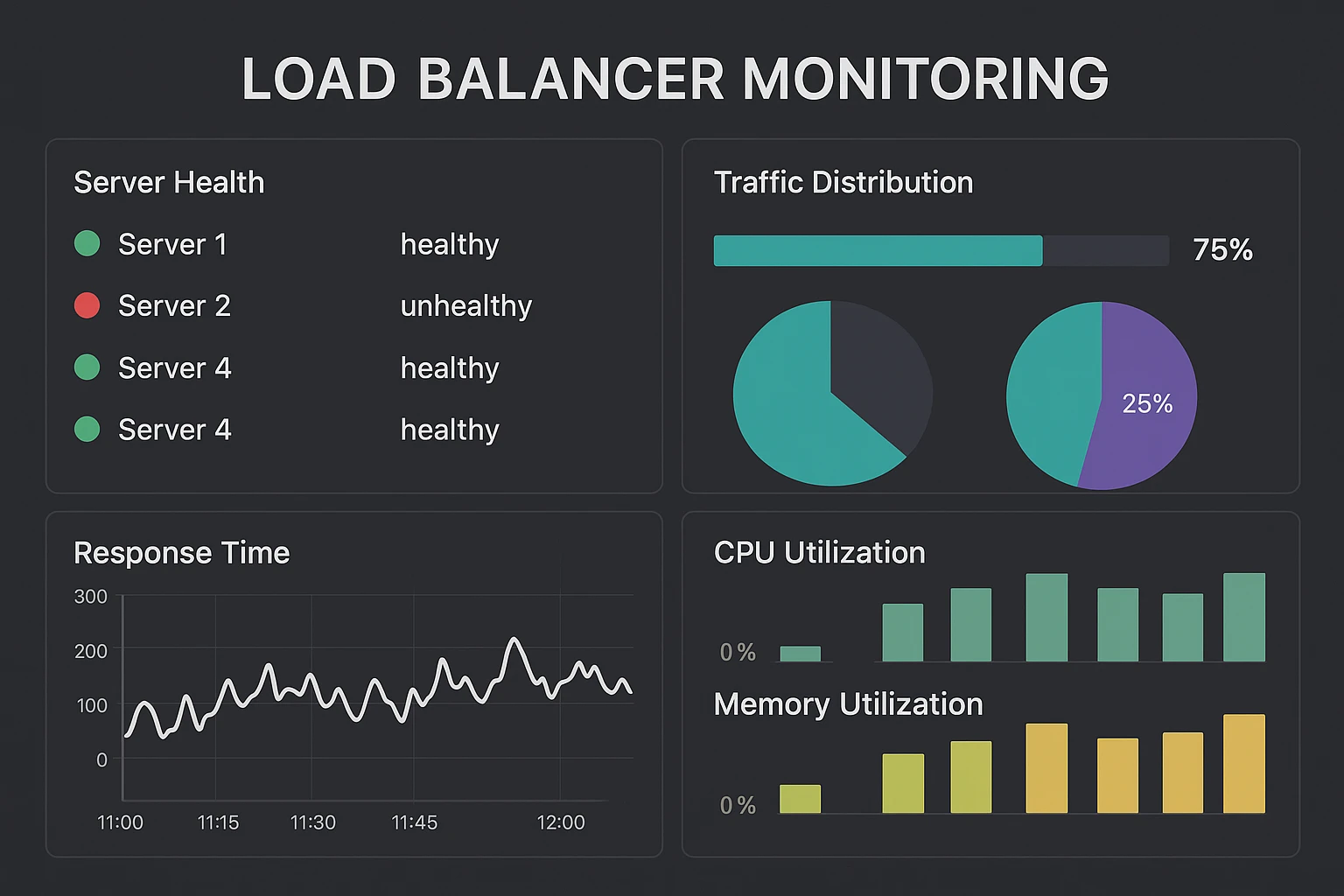

To verify that your load balancing is functioning correctly, do the following:

- Review the status of automated health checks for each server in the pool.

- Analyze logs to confirm requests are being distributed according to your configured algorithm.

- Monitor response times across servers to ensure consistent performance.

- Check the number of connections handled by each server to confirm balanced distribution.

- Temporarily disable servers to verify traffic automatically redirects to healthy nodes.
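Several of these checks can be scripted. As one example, here is a minimal Python health prober built only on the standard library, assuming each backend exposes an HTTP endpoint such as `/health` that returns a 2xx status when the server is ready; the URLs are placeholders. Running it before and after you disable a server is a quick way to confirm which backends should now be considered healthy, though your load balancer's own health-check results remain the authoritative view.

```python
import http.client
import urllib.error
import urllib.request


def is_healthy(url, timeout=2.0):
    """Return True if an HTTP GET to the health URL succeeds within `timeout`.

    urlopen raises HTTPError (a URLError subclass) for 4xx/5xx responses.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Refused connection, timeout, DNS failure, HTTP error status,
        # or a malformed response all count as "unhealthy".
        return False


def healthy_backends(health_urls, timeout=2.0):
    """Return only the backends whose health endpoint currently responds."""
    return [url for url in health_urls if is_healthy(url, timeout)]


if __name__ == "__main__":
    # Hypothetical health endpoints; replace with your own servers.
    urls = ["http://10.0.0.11/health", "http://10.0.0.12/health"]
    print(healthy_backends(urls))
```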

How you turn off a load balancer when it is not in use varies by platform, but it generally involves the following:

- For hardware appliances: Using the management interface to disable the virtual server or service

- For software solutions: Stopping the load balancing service or application

- For cloud services: Disabling the load balancer through the cloud provider's console

Comparing Load Balancing Solutions

Making the right choice for a load balancer depends on your specific requirements, but leading solutions include:

- Hardware: F5 BIG-IP, Citrix ADC (formerly NetScaler), A10 Networks

- Software: HAProxy, NGINX Plus, Kemp LoadMaster

- Cloud Services: AWS Elastic Load Balancing, Google Cloud Load Balancing, Azure Load Balancer

When evaluating load balancers, consider:

- Performance requirements and expected traffic volume

- Protocol support (HTTP/HTTPS, TCP/UDP, WebSocket)

- Advanced features needed (SSL offloading, WAF, content caching)

- Budget constraints

- Management interface and ease of configuration

- Monitoring and reporting capabilities

FAQ About Network Load Balancing and Other Related Technologies

What is the difference between routing and load balancing?

- Routing determines the path data takes between networks to reach its destination, focusing on network-to-network communication.

- Load balancing distributes traffic among multiple servers within the same application or service, focusing on optimizing resource utilization and availability.

Are a proxy and a load balancer the same?

While they share some functionality, they're not the same:

- A proxy server primarily acts as an intermediary for client requests, often focusing on caching, filtering, or providing anonymity.

- A load balancer specifically distributes traffic across multiple servers to optimize resource utilization and ensure availability.

Many modern load balancers incorporate proxy functionality, and some proxy servers offer basic load balancing features, which contributes to the confusion.

Do routers do load balancing?

Yes, many enterprise routers can perform basic load balancing across multiple WAN links using techniques such as Equal-Cost Multi-Path (ECMP) routing. However, router-based load balancing differs from application load balancing: it typically offers fewer features and less sophisticated health monitoring than dedicated load-balancing solutions.

Does OSPF load balance?

Yes. OSPF (Open Shortest Path First) supports equal-cost multi-path (ECMP) routing: when multiple paths to the same destination have identical cost metrics, OSPF can install all of them and distribute traffic across those paths.
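A simplified Python sketch of ECMP next-hop selection, assuming routes are given as (next hop, cost) pairs: only the lowest-cost paths are kept, and a hash of the flow's addresses and ports picks one of them, so packets of one flow stay on one path (avoiding reordering) while different flows spread across paths. The next-hop addresses are hypothetical, and real routers use their own hardware hash functions rather than SHA-256.

```python
import hashlib


def equal_cost_paths(routes):
    """Keep only the next hops whose cost equals the best (lowest) cost."""
    best = min(cost for _, cost in routes)
    return sorted(hop for hop, cost in routes if cost == best)


def ecmp_next_hop(routes, src_ip, dst_ip, src_port, dst_port, proto="tcp"):
    """Hash the flow identifiers onto one of the equal-cost next hops."""
    paths = equal_cost_paths(routes)
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % len(paths)
    return paths[index]


if __name__ == "__main__":
    # Two hypothetical paths with OSPF cost 20 and one costlier path (30).
    routes = [("10.1.1.1", 20), ("10.2.2.2", 20), ("10.3.3.3", 30)]
    print(equal_cost_paths(routes))  # ['10.1.1.1', '10.2.2.2']
    print(ecmp_next_hop(routes, "192.0.2.5", "198.51.100.9", 40000, 443))
```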

Conclusion

Network load balancing is no longer just for enterprise applications—it's a necessity for any organization delivering reliable digital services. From ensuring high availability to optimizing performance and enabling scalability, load balancers serve as the traffic directors of the modern internet.

Whether you're implementing Microsoft's NLB for a Windows environment, leveraging F5's BIG-IP for enterprise-grade application delivery, or utilizing cloud-based load balancing services, this article will help you make informed decisions about your network architecture.

Proper monitoring, regular health checks, and continuous optimization are essential to maintain peak performance. As your application needs evolve, don't hesitate to reassess your load balancing strategy to ensure it continues to meet your requirements.

By implementing the right load balancing solution for your specific needs, you're taking a critical step toward building a resilient, high-performance application infrastructure capable of delivering consistent user experiences even under heavy load.
